The far-right forum 8chan has been shut down amidst an outcry over its racist content. But does suspending hate speech websites work?

8chan was launched six years ago as a spin-off of a similar forum called 4chan, which, according to 8chan’s creator, had grown authoritarian by censoring too much content. 8chan therefore promised unfettered free speech.

This lack of oversight came under fire several times after it transpired that the forum was used mostly for spreading far-right views and racist comments, and even for coordinating attacks against people holding opposing political views.

The breaking point for the site came this month after the shooting in El Paso. The shooter had used 8chan to publish racist ideas before killing 22 people, saying “this attack is a response to the Hispanic invasion of Texas.”

The following day the site’s hosting company, Cloudflare, dropped 8chan as a client, stating that “8chan has repeatedly proven itself to be a cesspool of hate.”

Bigger questions to answer

But censoring online content is like skating on thin ice. It is also technically very difficult to do. It took 8chan only a couple of hours to find a new host. Even though the new host swiftly dropped its new client once it realized who that client was, the sheer number of hosting possibilities suggests that this is not a fight that can be won. Should we even try, then? In an MIT Technology Review blog post, Angela Chen explains why it just might work:

After a group of tech companies kicked InfoWars founder Alex Jones off their platforms, initial interest in him spiked, but a year later, he had mostly disappeared. One 2017 study found that Reddit’s decision to ban communities like r/fatpeoplehate and r/CoonTown led to less hate speech on the site, says study coauthor Eshwar Chandrasekharan, a PhD candidate at Georgia Tech. The reason: extremely motivated users will follow a community or personality to a new place, but less-engaged members drop off completely.

Similarly, the neo-Nazi site Daily Stormer used to be a central organizing space for the far right. Since it was dropped by web-hosting company GoDaddy two years ago, its influence within the movement has significantly waned, says Becca Lewis, a researcher who studies online political subcultures. “If 8chan stays down, there’s reason to believe that that would have a big impact on what we see in terms of online organizing in the far-right movement,” she adds.

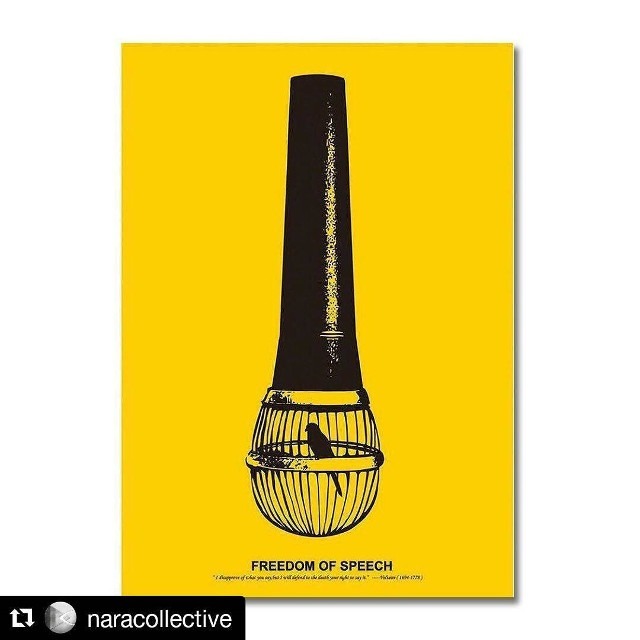

The fight between free speech and censorship on the internet is therefore ongoing. The US Congress has called on 8chan’s owner to testify about the hate speech appearing on the website. In New Zealand, the site has been banned outright.

Taking away hate speech platforms will not solve the problem completely, but it is another step towards reducing the poisonous reach of hate speech.