Virtualisation is a method of maximising the use of resources in an IT infrastructure.

Computers, especially servers, are expensive pieces of hardware, and software licensing costs can also mount up. Getting the best possible return on that investment is therefore critical.

While there are many benefits of virtualisation, there are also many challenges, both technical and organisational.

Congratulations! You are now the proud owner - as an example for this article - of a new Dell PowerEdge R6525 server.

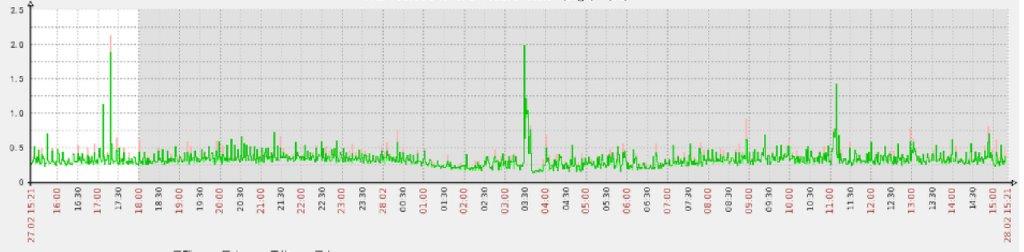

This machine costs CHF 15,000, and we are going to use it as our new web server. We configure it, install the operating system and software, and set it to work. Let’s take a look at how it is performing.

Here, it is only using a tiny percentage of the available resources (in this case, CPU). Not a good return on investment. What can we do to improve the situation?

But what do we mean by virtualisation?

At the moment, we are running one instance of an operating system on the server. However, with some clever software, known as a hypervisor, we can run multiple virtual servers on the same physical server.

Each of these virtual servers thinks that it is fully independent, and will have its own connections to disks, networks, and other external resources. The virtual servers are isolated from each other and data is isolated on each machine.

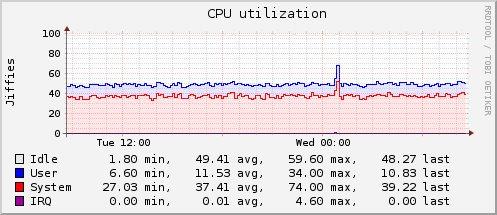

Now let us take a fresh look at the processor loads.

That is a far better result.

Today, servers, networks, disk arrays, PCIe cards, and even applications are virtualised. With the increasing demand for processor cycles combined with the exponential growth of data, extracting the best performance possible is more important than ever.

So, what was once our physical web server now also hosts a virtual web server, a database server, a middleware server, the e-mail client, various developer tools, and so on. A positive side-effect of this, known as server consolidation, is that the old database server, middleware server, and others have been made redundant, along with their hardware and support costs, and the per-socket licensing costs have been cut as well.
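To make the consolidation argument concrete, here is a minimal Python sketch. The workload names and CPU percentages are invented for illustration and are not measurements from the server above. It assumes that every former server had comparable hardware, so that the average loads can simply be added together.

```python
# Hypothetical average CPU loads (percent of one comparable server) for
# workloads that used to run on separate physical machines.
# These figures are illustrative assumptions, not measurements.
workloads = {
    "web": 5.0,
    "database": 30.0,
    "middleware": 15.0,
    "mail": 10.0,
}


def consolidated_load(loads):
    """Return the combined average CPU load if all workloads share one host."""
    return sum(loads.values())


def servers_saved(loads):
    """Return how many physical servers are no longer needed."""
    return len(loads) - 1


total = consolidated_load(workloads)
print(f"Combined load on one host: {total:.0f}%")
print(f"Physical servers saved: {servers_saved(workloads)}")
```

Averages hide peaks, so a real sizing exercise would also look at when each workload is busiest.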

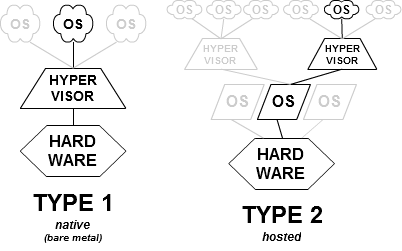

A virtualisation server may be one of three main types: native (Type 1) hypervisors, hosted (Type 2) hypervisors, and containers.

We will look at each of these in turn.

Native or bare-metal hypervisors are classified as Type 1. These run directly on the host’s hardware to manage guest operating systems. Examples of this include IBM’s z/VM and the Power Hypervisor, Microsoft’s Hyper-V, Oracle VM Server, VMware ESXi, and Xen.

Hosted virtualisation is classified as Type 2. These hypervisors run on a conventional OS, with the guest operating systems running as processes on the host operating system. Examples include Parallels Desktop for Mac, VirtualBox, QEMU, and VMware’s Workstation and Player.

Some virtualisation technologies span the two types. For example, KVM and FreeBSD’s bhyve run inside a general-purpose operating system (as a Type 2 hypervisor does), but they are implemented as kernel modules that give the VMs bare-metal access to the hardware (as a Type 1 hypervisor does).

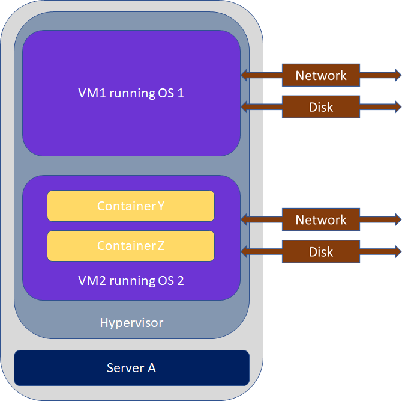

Containers are a mechanism for limiting an application’s access to the operating system’s resources. A container may therefore run only a single application, such as MS Word, without requiring an entire Windows machine. The application itself will not be affected by this constraint. However, every application in the container(s) will be dependent on the host operating system: for example, a Linux application cannot run in a container hosted by Windows.
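The dependency on the host operating system can be expressed as a very small check. The following Python sketch is a deliberate simplification of the rule described above; the function name `can_run_in_container` is hypothetical and does not belong to any container product.

```python
import platform


def can_run_in_container(app_os, host_os=None):
    """Return True if an application built for app_os matches the host OS.

    A container shares the host's operating system, so in this simplified
    model the two must be the same.
    """
    if host_os is None:
        # Ask Python which OS we are on, e.g. "Linux", "Windows", "Darwin".
        host_os = platform.system()
    return app_os.lower() == host_os.lower()


print(can_run_in_container("Linux", host_os="Linux"))    # True
print(can_run_in_container("Linux", host_os="Windows"))  # False
```

A full virtual machine has no such restriction, because it brings its own operating system with it.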

How does virtualisation work?

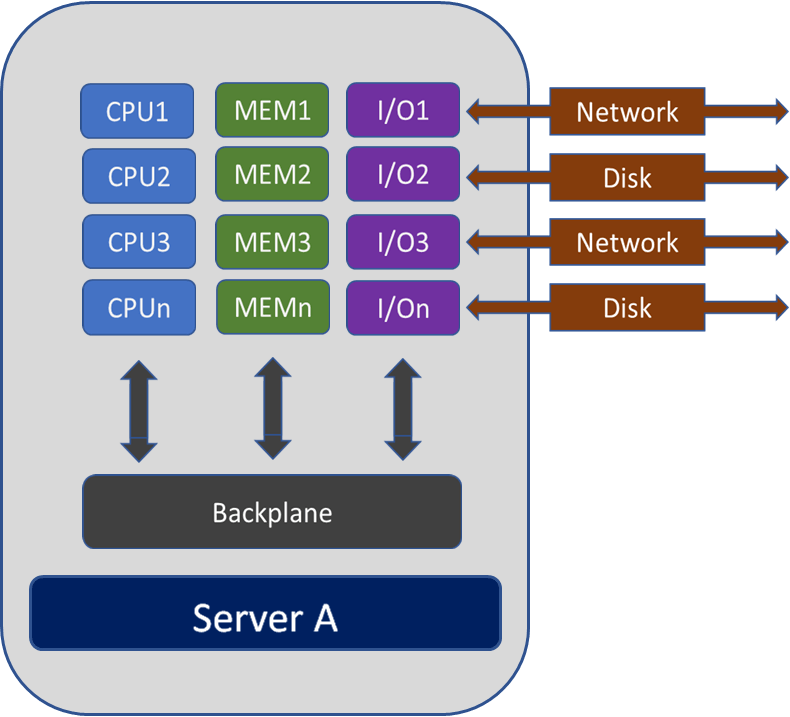

Let us start by looking at the typical functional elements of a physical server.

The server is based around the CPUs (central processing units, processors, or the “brains” of the machine), memory (random-access memory, or RAM, which stores information as needed), and I/O (input/output, how the machine talks to the outside world). These are connected by a backplane, which allows the components to communicate. For example, if program “A” running on CPU1 needs to send a message to another computer, the information is passed via the backplane to one of the I/O cards.

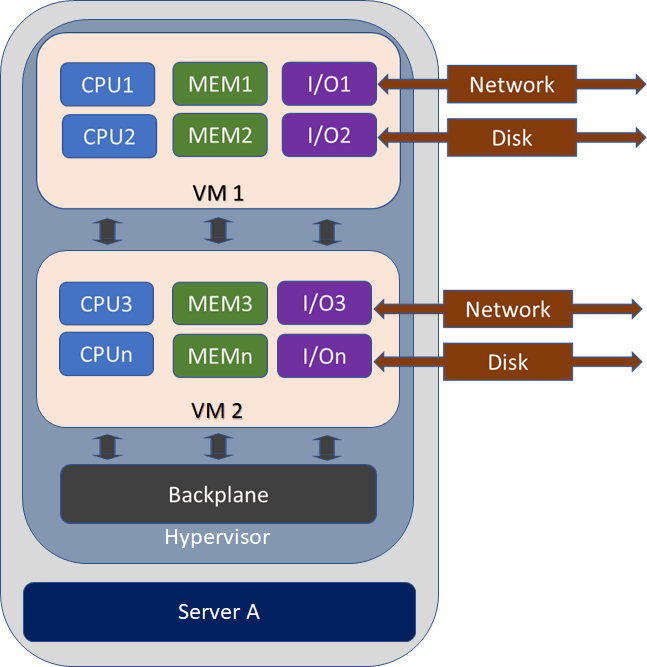

As this example is not virtualised, there is only one copy of the operating system installed. When we virtualise this machine, we use its resources – CPU, memory, and I/O – to allow multiple operating systems to run in parallel, a bit like this:

(Please note that the backplane is accessible to both VM1 and VM2.)

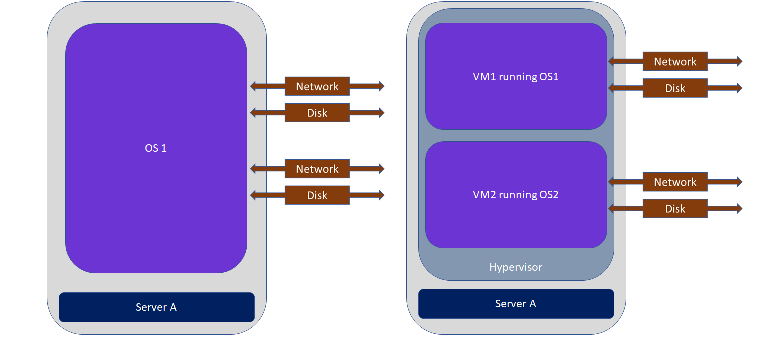

So here is the before-and-after of the non-virtualised and virtualised system, seen at the operating system level:

In the above example, I have evenly split the resources on the server: in reality, perhaps VM1 is running a database and needs three processors and more memory. However, most virtualisation systems also allow the dynamic allocation of resources. So if there is a month-end batch to run and VM1 needs more CPU, then we can temporarily reduce the CPUs present in VM2 and allocate them to VM1.
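The month-end scenario can be sketched in a few lines of Python. This is a toy model, not the interface of any real hypervisor: the `VirtualMachine` class and `move_cpus` function are hypothetical, and the starting point of two CPUs per VM is an assumption based on the even split described above.

```python
from dataclasses import dataclass


@dataclass
class VirtualMachine:
    name: str
    cpus: int


def move_cpus(source, target, count):
    """Move `count` CPUs from source to target, leaving source at least one."""
    if count < 0:
        raise ValueError("count must not be negative")
    if source.cpus - count < 1:
        raise ValueError(f"{source.name} must keep at least one CPU")
    source.cpus -= count
    target.cpus += count


# Assumed starting point: four CPUs split evenly between the two VMs.
vm1 = VirtualMachine("VM1", cpus=2)
vm2 = VirtualMachine("VM2", cpus=2)

move_cpus(vm2, vm1, 1)  # month-end batch starts: VM1 borrows a CPU
print(vm1, vm2)

move_cpus(vm1, vm2, 1)  # batch finished: hand the CPU back
print(vm1, vm2)
```

The total number of CPUs never changes; they are only shared out differently, which is the whole point of dynamic allocation.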


Containers run on an existing operating system, so VM2, running OS2, might host containers Y and Z:

This can all become overly complicated very rapidly. Any virtualised installation needs an in-depth planning stage. System performance metrics must be gathered to establish a baseline against which the VMs can be sized. A clear set of guidelines defining the standards to be implemented is also necessary. Finally, those implementing the systems need a good level of technical knowledge.
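As an illustration of what a baseline might look like, here is a minimal Python sketch. The utilisation samples are invented, and the 20% headroom factor is an assumption rather than a recommendation; `baseline` and `cpus_needed` are hypothetical helper names.

```python
import math
import statistics

# Hypothetical CPU utilisation samples (percent), e.g. one reading per hour.
samples = [12, 15, 11, 40, 55, 60, 35, 14]


def baseline(readings):
    """Summarise utilisation readings as average and peak."""
    return {
        "average": statistics.mean(readings),
        "peak": max(readings),
    }


def cpus_needed(peak_percent, physical_cpus, headroom=1.2):
    """Estimate CPUs for a VM: peak demand plus headroom, rounded up."""
    return math.ceil(peak_percent / 100 * physical_cpus * headroom)


summary = baseline(samples)
print(summary)
print("CPUs to allocate:", cpus_needed(summary["peak"], physical_cpus=4))
```

Here `physical_cpus` is the number of CPUs in the server that was measured. In practice, memory and I/O would be baselined in the same way.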

In the next article, I will compare different virtualisation offerings on the market.

Further reading:

Repository Software: a non-technical introduction by

Continuous Integration: a non-technical introduction by

Images:

Andrew Clandillon

First CPU graph: StarWind Software